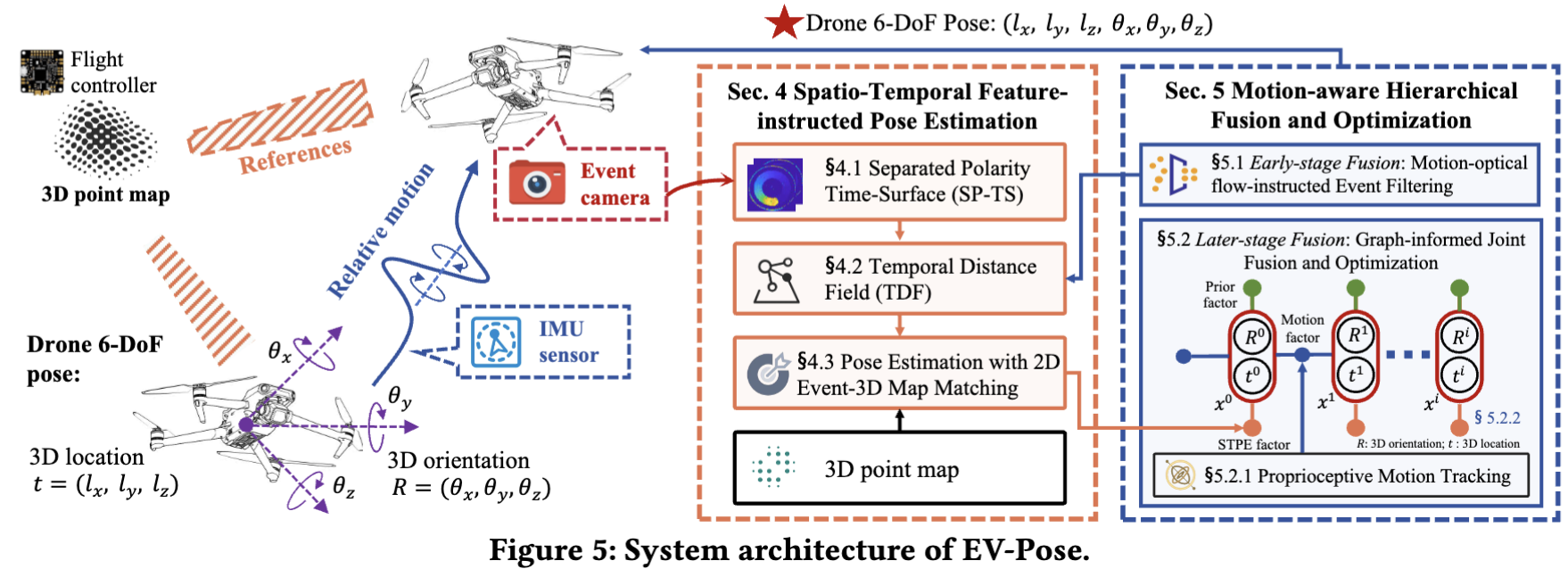

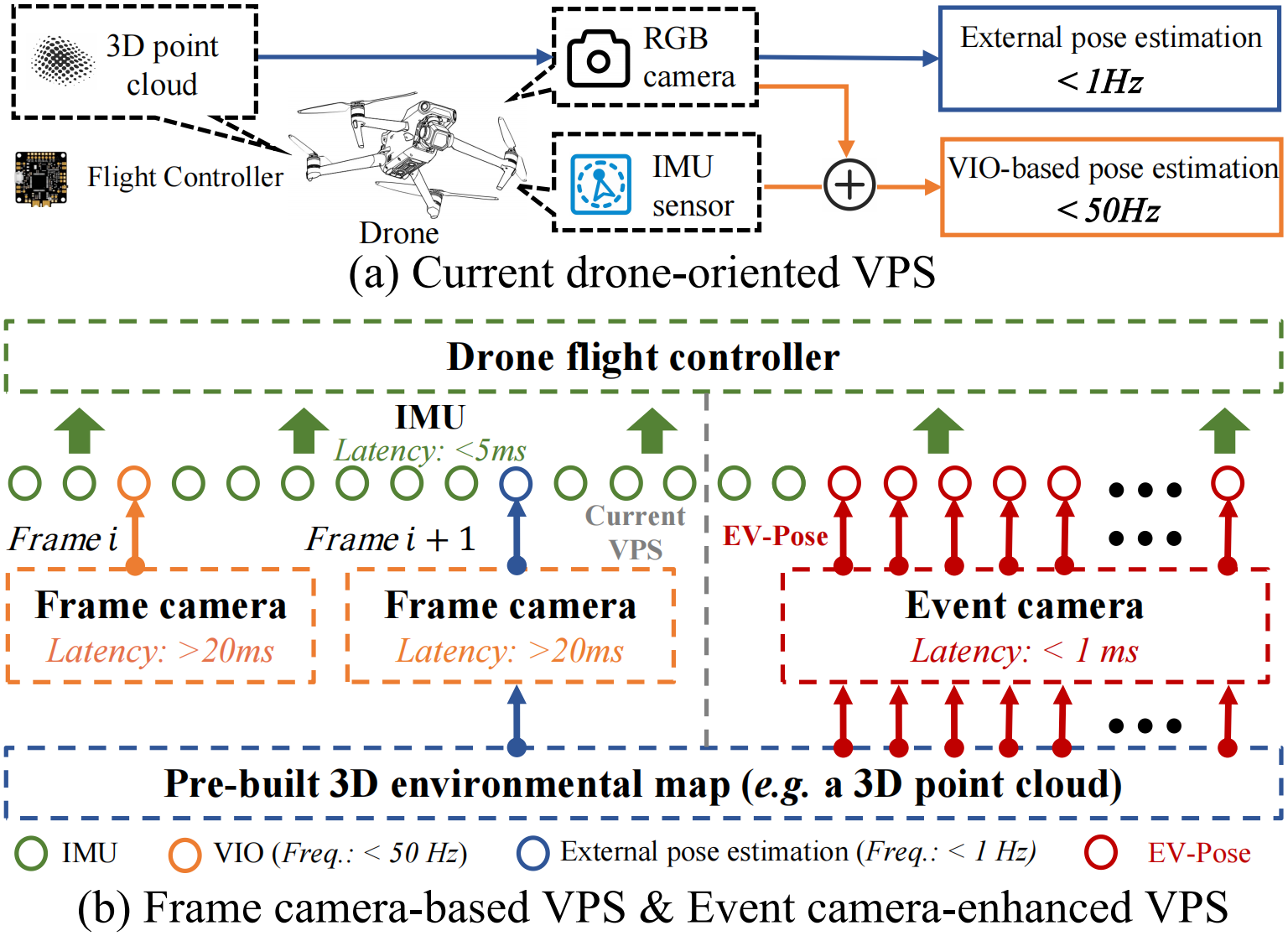

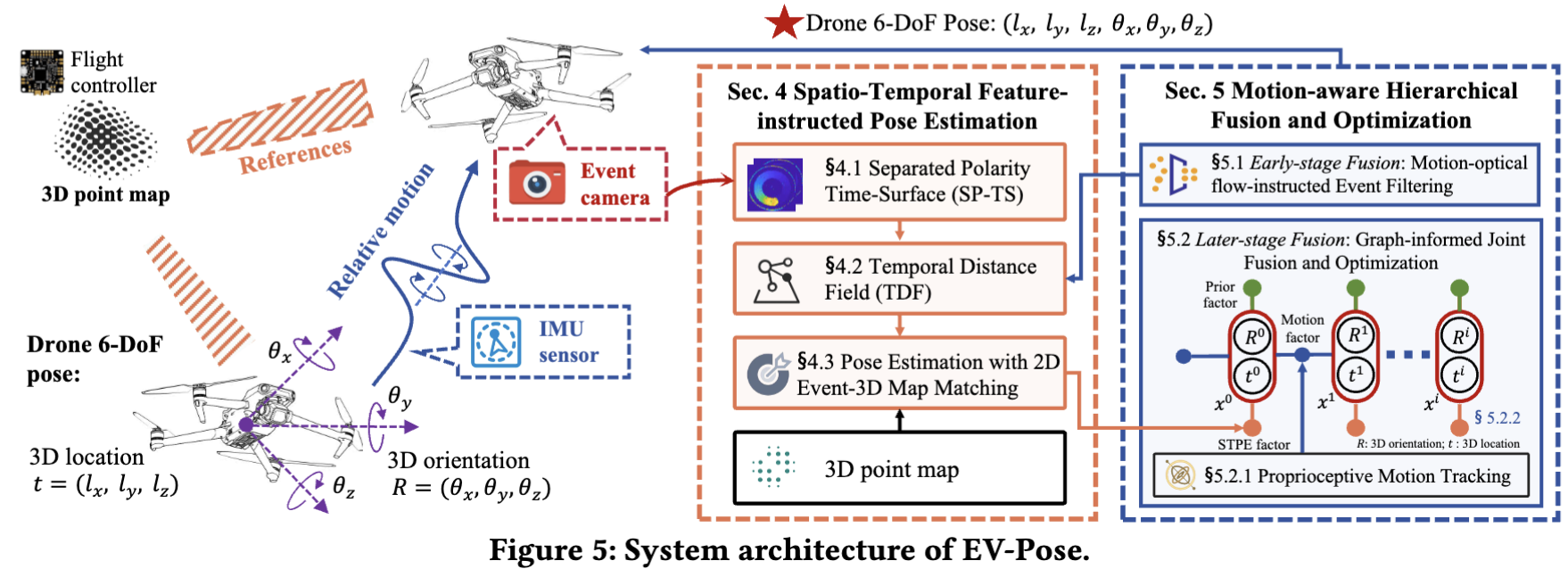

At a high level, we design EV-Pose, an event-based 6-DoF pose tracking system for drones that redesigns current VPS around an event camera.

EV-Pose leverages prior 3D point maps and the temporal consistency between the event camera and the IMU to achieve accurate, low-latency 6-DoF drone pose tracking.

(i) Spatio-Temporal Feature-instructed Pose Estimation (STPE) module (§4).

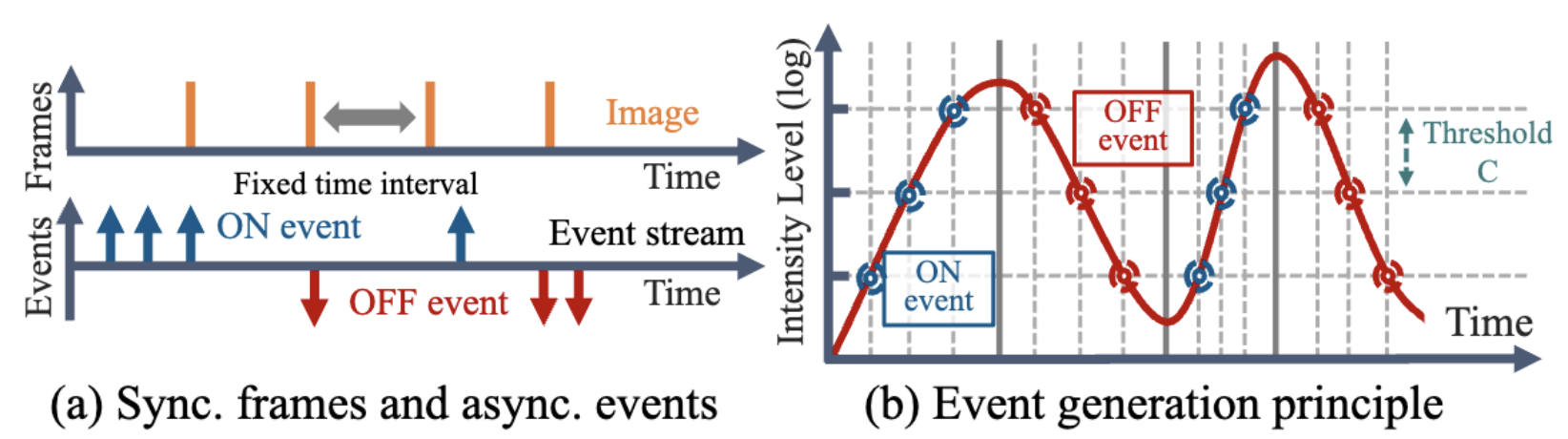

This module first introduces the concept of a separated polarity time-surface, a novel spatio-temporal representation for event streams (§4.1).
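To make the idea of a separated-polarity time-surface concrete, here is a minimal sketch assuming the common exponential-decay formulation: each pixel stores the timestamp of its most recent event, kept separately for positive and negative events, and decays with a hypothetical time constant `tau`. The function name and parameters are illustrative only, not the exact representation defined in §4.1.

```python
import numpy as np

def polarity_time_surfaces(events, height, width, t_ref, tau=0.03):
    """Build one exponentially decaying time-surface per polarity (sketch).

    events: iterable of (x, y, t, p), t in seconds, p in {+1, -1}.
    Returns shape (2, height, width): channel 0 = positive, 1 = negative.
    `tau` is an assumed decay constant, not a value from the paper.
    """
    # Most recent timestamp per pixel and polarity; -inf = never fired.
    last_t = np.full((2, height, width), -np.inf)
    for x, y, t, p in events:
        if t <= t_ref:  # only events up to the reference time count
            c = 0 if p > 0 else 1
            last_t[c, y, x] = max(last_t[c, y, x], t)
    # Recent events map to ~1; old or absent events decay toward 0.
    return np.exp((last_t - t_ref) / tau)
```

Keeping the two polarities in separate channels means a positive event cannot overwrite the timestamp of a more recent negative event at the same pixel, so both edge "sides" stay visible.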

Subsequently, it leverages the temporal relationships among events encoded in the time-surface to generate a distance field, which is then used as a feature representation for the event stream (§4.2).
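One simple way to turn a time-surface into a distance field is sketched below, under the assumption that pixels with a sufficiently recent event (time-surface value above a hypothetical `threshold`) act as edge pixels, and every pixel stores its Euclidean distance to the nearest such edge. This is an illustrative stand-in, not the exact construction of §4.2; it uses brute force, which is fine for small images but a real system would use a linear-time distance transform.

```python
import numpy as np

def distance_field(time_surface, threshold=0.5):
    """Pixel distance to the nearest 'recent' event pixel (sketch).

    time_surface: (H, W) array in [0, 1], e.g. max over polarity channels.
    Pixels above the assumed `threshold` are treated as event/edge pixels.
    """
    h, w = time_surface.shape
    ys, xs = np.nonzero(time_surface > threshold)
    if len(xs) == 0:
        return np.full((h, w), np.inf)  # no edges: everything is "far"
    gy, gx = np.mgrid[0:h, 0:w]
    # (H, W, N) squared distances from every pixel to all N edge pixels.
    d2 = (gy[..., None] - ys) ** 2 + (gx[..., None] - xs) ** 2
    return np.sqrt(d2.min(axis=-1))
```

A distance field is smooth away from the edges, which gives an alignment cost a useful gradient even when projected map points do not land exactly on an event.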

Finally, the 2D event-3D point map matching module formulates drone pose estimation as aligning the event stream's distance-field feature with the 3D point map, which enables absolute pose estimation of the drone (§4.3).
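The alignment can be pictured as a cost over candidate poses: project the 3D map points into the image with a pinhole model and read the distance field at each projection. The sketch below assumes a world-to-camera pose `(R, t)`, intrinsics `K`, nearest-pixel lookup, and a hypothetical `outside_penalty` for points that fall off-image or behind the camera; the paper's actual objective in §4.3 may differ.

```python
import numpy as np

def alignment_cost(points_w, R, t, K, dist_field, outside_penalty=10.0):
    """Mean distance-field value at projected 3D map points (sketch).

    points_w: (N, 3) map points, world frame.
    R, t: world-to-camera rotation (3x3) and translation (3,).
    K: 3x3 pinhole intrinsics. dist_field: (H, W) distance field.
    """
    h, w = dist_field.shape
    p_c = points_w @ R.T + t                      # world -> camera frame
    costs = np.full(len(points_w), outside_penalty)
    front = p_c[:, 2] > 1e-6                      # points in front of camera
    uvw = p_c[front] @ K.T
    uv = uvw[:, :2] / uvw[:, 2:3]                 # perspective division
    u = np.round(uv[:, 0]).astype(int)
    v = np.round(uv[:, 1]).astype(int)
    inside = (u >= 0) & (u < w) & (v >= 0) & (v < h)
    idx = np.flatnonzero(front)[inside]
    costs[idx] = np.minimum(dist_field[v[inside], u[inside]], outside_penalty)
    return costs.mean()
```

A pose estimator would then minimize this cost over `(R, t)`, for example with a nonlinear least-squares solver; the lowest cost is reached when the map's projected structure lies on the observed event edges.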

(ii) Motion-aware Hierarchical Fusion and Optimization (MHFO) scheme (§5).

This scheme first introduces motion-optical flow-instructed event filtering (§5.1), which combines drone motion information with structural data from the 3D point map to predict event polarity and perform fine-grained event filtering.

This step fuses event-camera and IMU data at the early, raw-data stage of processing; by discarding events before matching, it improves the efficiency of matching-based pose estimation.
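The polarity-prediction idea can be sketched with the brightness-constancy relation: if a pixel's log intensity $L$ moves with optical flow $\mathbf{u}$, then $\partial L/\partial t \approx -\nabla L \cdot \mathbf{u}$, so the sign of $-\nabla L \cdot \mathbf{u}$ predicts the event polarity. Below, the flow of each map point comes from projecting it before and after a small camera motion `(R_delta, t_delta)` (assumed to be integrated from the IMU), and `grads` is a hypothetical per-point image gradient derived from the map's structure. All names and the nearest-point matching `radius` are illustrative assumptions, not the filter defined in §5.1.

```python
import numpy as np

def project(K, p_c):
    """Pinhole projection of camera-frame points (N, 3) to pixels (N, 2)."""
    uvw = p_c @ K.T
    return uvw[:, :2] / uvw[:, 2:3]

def predict_polarity(points_c, grads, R_delta, t_delta, K):
    """Predicted polarity (+1/-1/0) and pixel location of each map point.

    points_c: (N, 3) map points in the current camera frame.
    grads: (N, 2) hypothetical log-intensity gradients at those points.
    R_delta, t_delta: assumed IMU-derived motion, current -> next frame.
    """
    p0 = project(K, points_c)
    p1 = project(K, points_c @ R_delta.T + t_delta)
    flow = p1 - p0                                  # predicted optical flow
    # Brightness constancy: dL/dt ~ -grad(L) . flow
    return np.sign(-(grads * flow).sum(axis=1)), p0

def filter_events(events, points_c, grads, R_delta, t_delta, K, radius=1.5):
    """Keep events whose polarity matches the nearest map point's prediction."""
    pred, pix = predict_polarity(points_c, grads, R_delta, t_delta, K)
    kept = []
    for x, y, t, p in events:
        d = np.hypot(pix[:, 0] - x, pix[:, 1] - y)
        j = np.argmin(d)
        if d[j] <= radius and pred[j] == p:
            kept.append((x, y, t, p))
    return kept
```

In this toy version, events far from any projected map point, or with the "wrong" polarity, are dropped, so fewer events reach the matching stage.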

This scheme then introduces a graph-informed joint fusion and optimization module (§5.2).

This module first infers the drone's relative motion through proprioceptive tracking and then uses a factor graph to fuse these relative-motion measurements with exteroceptive measurements from the STPE module.

This fusion, performed at the later stage of pose estimation, further improves the accuracy of matching-based pose estimation.
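The fusion principle can be illustrated with a deliberately simplified factor graph: 1-D positions instead of SE(3) poses, linear Gaussian factors, and hypothetical noise levels `sigma_odom` and `sigma_abs`. Relative-motion factors play the role of proprioceptive (IMU-derived) measurements and absolute factors play the role of map-matching results; the MAP estimate is then a weighted linear least-squares solution. This is a toy instance of factor-graph fusion, not the graph designed in §5.2, which would be nonlinear and solved iteratively.

```python
import numpy as np

def fuse_1d(odom, absolute, sigma_odom=0.05, sigma_abs=0.5):
    """MAP estimate of N 1-D positions from a linear-Gaussian factor graph.

    odom: N-1 relative displacements x[i+1] - x[i] (proprioceptive).
    absolute: dict {i: z_i} of absolute positions (exteroceptive).
    Each factor becomes one noise-weighted row of a least-squares system;
    at least one absolute factor is needed to fix the global offset.
    """
    n = len(odom) + 1
    rows, rhs = [], []
    for i, d in enumerate(odom):              # relative-motion factors
        r = np.zeros(n)
        r[i], r[i + 1] = -1.0, 1.0
        rows.append(r / sigma_odom)
        rhs.append(d / sigma_odom)
    for i, z in absolute.items():             # absolute-pose factors
        r = np.zeros(n)
        r[i] = 1.0
        rows.append(r / sigma_abs)
        rhs.append(z / sigma_abs)
    A, b = np.vstack(rows), np.array(rhs)
    x, *_ = np.linalg.lstsq(A, b, rcond=None)
    return x
```

Because the relative factors are weighted more heavily here, a disagreement between absolute measurements is spread along the whole trajectory instead of causing a jump at one node, which is the drift-limiting behavior the fusion aims for.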

Relationship between STPE and MHFO.

STPE extracts a temporal distance field feature from the event stream and aligns it with a prior 3D point map to facilitate matching-based drone pose estimation.

To further improve estimation efficiency and accuracy, EV-Pose incorporates MHFO, which leverages drone motion information at two stages: early-stage event filtering, which reduces the number of events involved in matching, and later-stage pose optimization, which recovers scale and produces a 6-DoF trajectory with minimal drift.